Bots'-eye view

November 9, 2016

Pratap Tokekar's research explores how heterogeneous teams of robots (aerial, ground-based, and water-based) can sense their surroundings and autonomously decide what kind of robot should be deployed where.

A ground-based robot exploring a room can survey a floor plan and crawl under a table, but it won't be able to see off the roof. By working with an aerial robot, however, the two can collaborate to form a complete map of the area.

"Designing machines that can autonomously explore the environment and coordinate with other, possibly heterogeneous, robots is a notoriously hard problem in robotics," said ECE Assistant Professor Pratap Tokekar.

Heterogeneous systems like these, he explained, force the robots to make decisions about what kind of robot to send to explore which areas, and how much information they need to exchange.

The NSF's Computer and Information Science and Engineering Research Initiation Initiative recently awarded Tokekar a grant to explore this topic, specifically the coordination of different robotic sensors as they attempt to collectively observe an environment.

One of Tokekar's current projects is a collaboration with researchers in plant pathology. He plans to use teams of both aerial robots and robotic boats to examine environmental hazards in water. The aerial robots will look down from above, observing patterns and mapping the contaminated area. The robotic boats will then collect samples from areas specified by the aerial robots. "This could be used for applications like monitoring an oil spill," explained Tokekar.

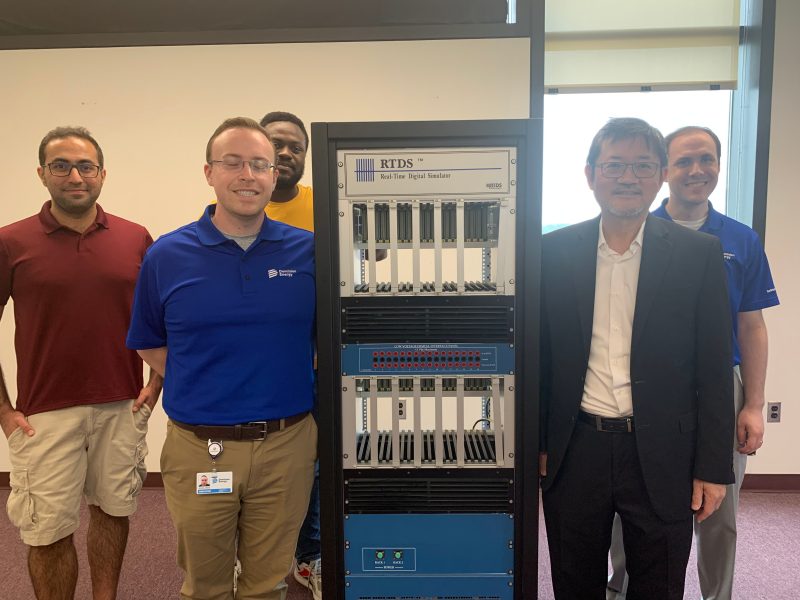

Aravind Premkumar (MSCPE '17) and Ashish Budhiraja (MSCPE '17) work in Tokekar's Robotics Algorithms & Autonomous Systems (RAAS) Lab.

This project builds on Tokekar's work as a Ph.D. student at the University of Minnesota, where he collaborated with researchers from the fisheries department to address an invasive fish species. They built and deployed one-meter-long aquatic robots to track invasive carp that were wreaking havoc on local fish populations. The automated fleet of robotic boats tracked and modeled the motion of the fish, and the data they collected will be incorporated into subsequent measures to curb the invaders.

Tokekar has also been researching how robots could be used in precision agriculture. He's been developing robots that regularly collect data on farms. They measure nitrogen content in the soil, map areas for targeted irrigation, and estimate annual harvests to assess the state of a farm.

For a robot to be able to autonomously decide how to behave in situations like these, it needs to make sense of a lot of information from multiple sources, explained Tokekar. Meeting this challenge requires knowledge from many disciplines, including mechanical engineering, electrical and computer engineering, and computer science. "If you can bring in tools and techniques from more than one area, the impact will be so much better," he said. "There is a strong interplay between machine learning, control systems, and robotics."

Currently, Tokekar is working with many techniques from combinatorial optimization, controls, and machine learning. "The robot needs to be able to look at the sensor data, analyze data, take measurements, and view the world the way a human would. This calls for machine-learning algorithms and incorporating computer vision," said Tokekar. "Of course, sometimes it's just pure geometry."

One of Tokekar's challenges is balancing his efforts between fundamental research and practical applications. "We have to spend a lot of time getting the robots to work in the environments and conditions we want them to," Tokekar explained, "but since we're working on research, we need to make progress on the overall system. We have to step back and analyze the system in an abstract sense and come up with results that will work independent of the system." Finding the balance between these competing forces, he said, is a difficult task.